Ninety-one percent of Internet users feel they have “lost control” of their personal information, Pew Research Center found in a 2016 poll.[1] The exponential increase in the capacity of firms to collect, store, and analyze data raises significant privacy concerns. But the most significant challenge for policy makers is not the risk that personal data will be misused. Instead, it is the fact that there is no common understanding among consumers, firms, and regulators about the extent or the nature of this risk.

Consumers feel that they have lost control because they do not clearly understand how and when firms collect and use their personal information. For their part, firms and regulators do not have a clear appreciation of digital consumers’ privacy preferences. This situation damages both consumer and commercial interests. Consumers risk unwittingly exposing themselves to privacy violations, while firms face regulatory uncertainty and struggle to win consumer trust.

For these reasons, we need privacy laws and policies fit for the digital age. A necessary first step in privacy reform is to improve privacy disclosure. Specifically, the government should mandate digital “Privacy Labels.” Privacy labeling laws will give consumers more control over their personal data and, by enabling consumers to make informed decisions, help firms and regulators to better understand consumer privacy preferences. Consequently, such laws will also set the conditions for more informed debate and evidence-based privacy regulation.

Technological Capacity

More personal data is being generated and collected than ever before. Three technological forces drive this growth: (1) increased data processing power, (2) the economic value of bigger networks, and (3) the cost effectiveness of storing information in “the cloud.” Moore’s law, the observation that the number of transistors on a chip (and with it processing power) roughly doubles every two years, incentivizes firms to collect vast amounts of data and provides the power to analyze and monetize them. At the same time, Metcalfe’s law, which holds that a network’s value grows with the square of the number of its connected users and devices, helps ensure that digital connectivity continues to expand across traditional and new platforms, such as the Internet of Things. Between 2003 and 2010, the number of connected devices worldwide grew from 500 million to 12.5 billion, and it was predicted to reach 50 billion by 2020.[2] It follows that the more people and devices (from cars to fridges) that connect to the Internet, the more data there are to collect. Finally, cloud computing, hailed by Microsoft and others as the “Fourth Industrial Revolution,” permits storage of an unprecedented amount of data, which are often technologically infeasible or cost prohibitive to delete.[3]

Privacy Regulation

Despite the obvious privacy risks of mass data collection and storage, policy makers seem uncertain how to proceed. This uncertainty stems in part from the amorphous nature of privacy itself. As defined by Alan Westin, a privacy law pioneer, privacy is the ability of individuals “to choose freely under what circumstances and to what extent they will expose themselves, their attitude, and their behavior to others.”[4] Thus, by definition, privacy is subjective. Individuals value their privacy differently, and the extent to which they choose to expose personal details is highly context dependent.[5] Consumers can also trade or otherwise relinquish their privacy rights. Indeed, respect for autonomy, said to be the root of privacy, entails not only the freedom to keep personal data private but also the freedom to disclose it. While we know that privacy preferences vary depending on the individual and the context, there is insufficient evidence to assess the scope of these preferences.[6] Regulators, therefore, do not yet have a clear understanding of what privacy choices they should be protecting.

Systemic Information Asymmetry

The current privacy regulatory regime complicates assessment of consumer privacy expectations since it incentivizes information asymmetry between firms and consumers. The United States does not have a central privacy protection authority or comprehensive privacy law. Instead, firms’ privacy obligations depend on the sector and state they operate in and on the type of information they collect. The main way organizations have responded to privacy concerns is by posting privacy policies (PPs) to their websites, which purport to notify users about the website’s data collection, use, disclosure, and security practices. Most firms are not legally obliged to post a PP.[7] However, virtually all now do (compared to only 2 percent in 1998).[8] While this development might appear to be an admirable example of industry self-regulation, it is best characterized as self-protection. US privacy regulation focuses on ensuring that users receive notice of and consent to uses of their personal data. Consequently, firms typically produce PPs that are verbose, jargon-cluttered, and even internally inconsistent.[9] This type of drafting maximizes a firm’s ability to argue that it notified consumers of objectionable data practices. While the Federal Trade Commission (FTC) can charge organizations that violate their own PPs, the more complex and ambiguous the PP, the less likely an FTC action will succeed.[10]

The use of PPs as the predominant vehicle for informing consumers about privacy issues means that consumers often lack the ability to make informed choices about how and when to share their personal information. Unsurprisingly, most digital consumers do not even attempt to read PPs.[11] Indeed, even the most diligent consumer could not read every PP she encounters. Researchers Lorrie Cranor and Aleecia McDonald estimate that this task would take around seventy-six work days every year.[12] Further, for consumers who do attempt to read a PP, its ambiguity might nudge them toward complacency. As legal scholar Cass Sunstein has detailed, a complex or vague disclosure document can cause recipients to be indifferent to the risks it informs them about.[13] This behavioral effect further muddies analysis of consumer preferences and of what consumers would consent to if fully informed.

Information asymmetry also harms commercial interests. Microsoft has lamented that firms that do not have transparent data practices are undermining industry trust.[14] Consumer distrust leads to suboptimal economic outcomes. A World Economic Forum report warned that more transparent privacy disclosure is needed to unlock the economic potential of big data.[15] Given the unprecedented economic and scientific possibilities of big data,[16] both industry and governments share an interest in privacy regulation that (re)builds consumer trust.

Reform Options

The failings of the voluntary PP regime have prompted a number of reform proposals. These can be broadly categorized into two groups: those that advocate limiting data “collection,” and those that focus on restricting its “use.” Consumer advocates have historically argued that personal information should be collected and stored only for specific, identified purposes, a concept reflected in the Fair Information Practice Principles, which originated in a 1973 federal government report and were later adopted by the FTC.[17] However, limiting data collection is not necessarily practical in the age of big data. The value of large datasets comes from using them in unexpected and innovative ways to find previously unanticipated patterns.[18]

A second group of reform proposals, led by the President’s Council of Advisors on Science and Technology (PCAST), attempts to balance innovation and privacy by instead focusing on regulating data use.[19] However, policies that shift the focus from collection to use disempower consumers, leaving decisions as to what constitutes acceptable uses of their data to bureaucrats or firms. By diminishing consumers’ rights to control which information is collected in the first place, policies that focus on use also deprive consumers of autonomy—the very essence of privacy.

Introduce Privacy Labels to the Digital Arena

Rather than shifting the focus of privacy regulation from data collection to use, the focus should be on improving disclosure at the stage of data collection. Specifically, legislation should require privacy labels to be conspicuously displayed on digital products and services operated by US organizations or sold in the United States. A privacy label can be thought of as a summary of the most salient, privacy-related information about the relevant product or service—be it a website, app, or Internet-enabled device.

Governments have mandated information labels in other domains, for example to show the health risks of smoking, the nutritional content of packaged food, and the energy efficiency of household appliances. Introducing privacy labels to the digital arena could empower consumers to strike bargains with firms that are consistent with their privacy preferences. Recognizing that people value privacy differently, privacy labels permit consumers to exercise informed consent on a case-by-case basis. They also avoid the need for heavy-handed government intervention that might fetter innovation, for example by prohibiting in advance any transaction in which data is collected for unspecified or unlimited purposes.

Legal Framework

Privacy label legislation should empower a government agency such as the FTC to prescribe requirements for the form and content of labels and to enforce these requirements. The same agency should also be given legislative responsibility for researching and designing effective labels, sensitive to technological constraints and to industry and consumer needs. In effect, the responsible agency should create a mandatory template that would specify minimum form and content requirements for labels while leaving certain details up to individual firms. There is precedent for this type of legislative disclosure regime. For example, the Dodd–Frank Act authorizes the Consumer Financial Protection Bureau to issue rules to ensure financial consumers are “provided with timely and understandable information to make responsible decisions about financial transactions.”[20]

Guiding Principles

The mandatory privacy label template should be informed by principles of effective disclosure and regulatory best practice. As the confusion PPs currently cause for consumers demonstrates, the mere fact of disclosure does not guarantee that it will be effective. Instead, empirical research shows that effective disclosure policies need to be based on an understanding of how real people process information. Disclosure documents must be “concrete, straightforward, simple, meaningful, timely, and salient,” as Cass Sunstein has explained.[21] There should also be conspicuous disclosure of both the risks and benefits of a product or service. These principles have been incorporated into other agencies’ disclosure regimes. For example, the US Treasury Department has made clear that, in relation to consumer financial disclosure statements, technical compliance with the law is insufficient; statements must be “reasonable,” in the sense that they are understandable and non-deceptive to the average consumer.[22]

Another important element of disclosure regimes is that they must facilitate comparison and market competition, so that consumers can shop for the product or service that best meets their privacy expectations. Accordingly, while different templates will likely be needed for different platform types—for example apps, sites, and physical devices—the appearance and content of privacy labels should be as consistent as possible. The label should also be displayed at a time that makes sense as part of the user experience so that consumers have fair opportunity to digest the information. To test whether a proposed template is consistent with these principles, it is recommended that the FTC conduct empirical tests including randomized experiments.

Content Requirements

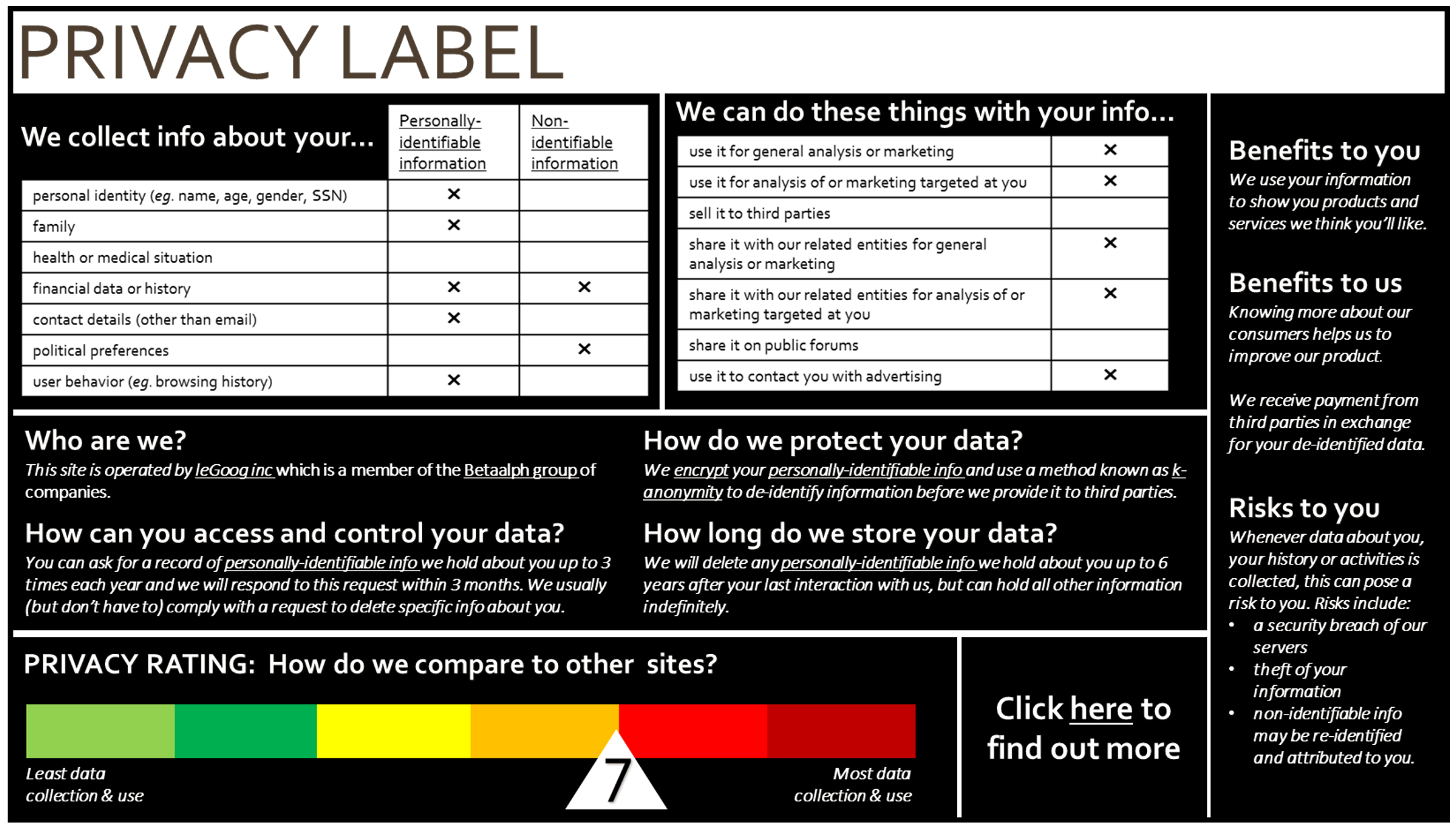

Labeling laws should require firms to disclose the most important privacy-related information to consumers. The Appendix imagines what this might look like. Most importantly, the label should include information about the types of data that will be collected and the way the organization will use these data, including how they will be stored and analyzed and who they might be shared with. A distinction should be drawn between personally identifiable and non-personally identifiable data, as it is likely that consumer sensitivity to privacy will differ between these categories. Further, the privacy label should display the benefits of data collection—for both consumers and the organization itself—together with key risks (such as fraud and theft) and the strategies for mitigating them. To make the information contained in the privacy label more salient to users, it would be helpful for the responsible agency to develop a ratings system so that consumers can see a graphic display of how much data a site or product collects in comparison to others. The label might also summarize any rights of the consumer to access, alter, or delete their information. Finally, the label could hyperlink to additional information, such as the full PP or definitions of more technical words.
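To make these content requirements more concrete, the following is a minimal Python sketch of how a machine-readable version of a label might be structured, together with one possible comparative rating. It is illustrative only: the class names (`DataPractice`, `PrivacyLabel`), the field names, the function `collection_rating`, and the 1-to-5 scale are hypothetical assumptions, not a proposed standard or any agency's format. The rating also assumes, for simplicity, that “how much data a product collects” can be approximated by the number of data categories it declares.

```python
from dataclasses import dataclass, field


@dataclass
class DataPractice:
    """One category of data a product collects (hypothetical schema)."""
    category: str                  # e.g. "location", "contacts"
    personally_identifiable: bool  # PII vs. non-PII, kept distinct on the label
    uses: list[str]                # how the data will be used, stored, or analyzed
    shared_with: list[str] = field(default_factory=list)  # third parties, if any


@dataclass
class PrivacyLabel:
    """Hypothetical container for the minimum label content described above."""
    product: str
    practices: list[DataPractice]
    benefits: list[str]            # to the consumer and to the organization
    risks: list[str]               # key risks (e.g. fraud, theft) and mitigations
    consumer_rights: list[str]     # e.g. "access", "alter", "delete"
    full_policy_url: str           # link to the full PP

    def categories_collected(self) -> int:
        return len(self.practices)

    def pii_categories(self) -> int:
        return sum(1 for p in self.practices if p.personally_identifiable)


def collection_rating(label: PrivacyLabel, peers: list[PrivacyLabel]) -> int:
    """Hypothetical 1-5 score; 5 means the product collects less than most peers.

    Counts the peers that collect at least as many data categories as
    `label` and scales that share to 1..5 (rounding up, integer math).
    """
    if not peers:
        raise ValueError("a rating needs at least one peer label")
    mine = label.categories_collected()
    at_least = sum(1 for p in peers if p.categories_collected() >= mine)
    score = -(-5 * at_least // len(peers))  # ceiling of 5 * at_least / n
    return max(1, min(5, score))


weather = PrivacyLabel(
    product="Example Weather App",  # hypothetical product
    practices=[
        DataPractice("location", True, ["local forecasts"]),
        DataPractice("device model", False, ["crash diagnostics"]),
    ],
    benefits=["forecasts for the user's current location"],
    risks=["location history could be exposed in a breach"],
    consumer_rights=["access", "delete"],
    full_policy_url="https://example.com/privacy",  # placeholder URL
)
print(weather.categories_collected(), weather.pii_categories())  # 2 1
```

Counting categories is deliberately crude. A ratings system designed by the responsible agency would presumably weight categories differently (for example, treating personally identifiable data as more sensitive), which is why the sketch keeps `pii_categories` as a separate measure.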

Display

Privacy labels should be graphically displayed to consumers. This differs from proposals that use privacy-enabling technology to make the information contained in PPs more transparent to consumers, such as the Platform for Privacy Preferences Project (P3P).[23] P3P allows users to input data collection and use preferences, and it alerts them or takes actions like blocking cookies if they encounter a site that does not conform to these preferences.[24] However, as critics of P3P have observed, “the existence of P3P technology does not ensure its use.”[25] Without a legislative obligation, widespread adoption of privacy-enabling technology is unlikely.
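The kind of automated matching P3P performs can be illustrated with a simplified sketch. It assumes a drastically reduced, hypothetical vocabulary (a set of purposes and a single third-party-sharing flag); actual P3P policies were XML documents with a much richer vocabulary, and the names `SitePolicy`, `UserPreferences`, and `evaluate` are invented for illustration. What it shows is that, once preferences are entered, the allow-or-block decision is made by software rather than by the user.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SitePolicy:
    """A site's declared practices (simplified; not the real P3P vocabulary)."""
    site: str
    purposes: frozenset[str]          # e.g. {"admin", "marketing"}
    shares_with_third_parties: bool


@dataclass(frozen=True)
class UserPreferences:
    """Preferences the user enters once in a browser or user agent."""
    allowed_purposes: frozenset[str]
    allow_third_party_sharing: bool


def evaluate(policy: Optional[SitePolicy], prefs: UserPreferences) -> str:
    """Return the action a P3P-style agent might take for one site."""
    if policy is None:
        return "warn"  # site publishes no machine-readable policy
    disallowed = policy.purposes - prefs.allowed_purposes
    sharing_conflict = policy.shares_with_third_parties and not prefs.allow_third_party_sharing
    if disallowed or sharing_conflict:
        return "block_cookies"  # site does not conform to the preferences
    return "allow"


prefs = UserPreferences(frozenset({"admin", "current"}), allow_third_party_sharing=False)
shop = SitePolicy("shop.example", frozenset({"current", "marketing"}), False)
print(evaluate(shop, prefs))  # block_cookies, because "marketing" is not allowed
```

Every site after the first is handled by the same rule without further input, which is the “set and forget” dynamic the privacy label proposal is meant to avoid.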

Moreover, because P3P and similar proposals minimize human decision making, they risk fostering a “set and forget” mentality. Behavioral science tells us that “vivid, salient, and novel presentations may trigger attention in ways that abstract or familiar ones cannot.”[26] Having a machine negotiate privacy rights behind the scenes is anything but vivid to the end user. By contrast, a conspicuously displayed privacy label explicitly confronts users with the privacy implications of the products and services they are using. Privacy labels will be most effective if they empower end users, rather than machines, to make informed decisions about their privacy.

Political Feasibility

A privacy disclosure regime can be framed as beneficial not just to consumers but to commercial interests. Privacy labels could reduce regulatory uncertainty and consumer distrust, which, as discussed above, have real economic consequences for firms. They could also give digital firms a new dimension on which to compete: their privacy offerings. Further, research suggests that consumers are less concerned about their privacy (at least vis-à-vis corporations, if not the government) than advocates believe.[27] As consumers’ lives become more digitized, it is plausible that they will be more willing to trade personal information for customized services.[28] If, after labeling laws are implemented, consumers give informed consent to significant information disclosures, this could be used as evidence to dissuade further government intervention. Privacy labels could therefore be a win-win reform for consumers and industry.

Conclusion

Admittedly, significant work is required to take the idea of privacy labels from concept to implementation. This article has not considered the costs—to government or to firms—of such a policy. Nor does it provide a detailed catalog of which products, services, and firms labeling laws should apply to. Despite these challenges, the opportunity to introduce mandatory privacy labels should not be missed. The United States is arguably approaching a tipping point on privacy reform, created by rapid technological change, declining consumer trust, and growing pressure on the US government to respond to both. There is simply not enough information—about what privacy preferences consumers have and about how organizations use consumers’ data—upon which to base good reform. The way that the market and the law have dealt with privacy concerns to date, via PPs, has only exacerbated this uncertainty. Mandatory privacy labels could help solve this problem by empowering consumers to make informed decisions based on information that is understandable, meaningful, and comparable across platforms.

APPENDIX: Sample Privacy Label[29]

PHOTO CREDIT: Frédéric Poirot via Flickr

[1] Lee Rainie, “The State of Privacy in Post-Snowden America,” Pew Research Center, 21 September 2016, http://www.pewresearch.org/fact-tank/2016/09/21/the-state-of-privacy-in-america/.

[2] Dave Evans, The Internet of Things: How the Next Evolution of the Internet Is Changing Everything (Cisco Internet Business Solutions Group, 2011), 3.

[3] Microsoft, “A Cloud for Global Good: A Policy Roadmap for a Trusted, Responsible, and Inclusive Cloud,” 2016, accessed 11 December 2016, https://news.microsoft.com/cloudforgood/_media/downloads/a-cloud-for-global-good-english.pdf.

[4] Alan Westin, Privacy and Freedom (New York: Atheneum, 1967), 7.

[5] Lawrence Lessig, Code: Version 2.0 (New York: Basic Books, 2006), 226.

[6] “Rethinking Personal Data: Trust and Context in User-Centered Data Ecosystems,” World Economic Forum, 2014, accessed 11 December 2016, http://www3.weforum.org/docs/WEF_RethinkingPersonalData_TrustandContext_Report_2014.pdf.

[7] Firms in specific sectors, notably finance and health, do however have obligations to disclose certain privacy-related information. See Gramm–Leach–Bliley Act of 1999, Pub. L. 106–102, 113 Stat. 1338 (1999); Fair Credit Reporting Act of 1970, 15 U.S.C. § 1681 (1970); Health Insurance Portability and Accountability Act of 1996, Pub. L. 104–191, 110 Stat. 1936 (1996).

[8] Allyson Haynes, “Online Privacy Policies: Contracting Away Control over Personal Information?” Penn State Law Review 111, no. 3 (2007): 593.

[9] “Privacy Online: Fair Information Practices in the Electronic Marketplace,” Federal Trade Commission, 2000, accessed 11 December 2016, https://www.ftc.gov/sites/default/files/documents/reports/privacy-online-fair-information-practices-electronic-marketplace-federal-trade-commission-report/privacy2000text.pdf.

[10] Haynes, “Online Privacy Policies,” 603.

[11] Ibid., 587.

[12] Alexis Madrigal, “Reading the Privacy Policies You Encounter Would Take 76 Work Days,” Atlantic, 1 March 2012.

[13] Cass Sunstein, “Empirically Informed Regulation,” The University of Chicago Law Review 78, no. 4 (2011): 1349.

[14] Microsoft, “A Cloud for Global Good,” 17.

[15] “Rethinking Personal Data.”

[16] See, e.g., President’s Council of Advisors on Science and Technology, “Big Data: A Technological Perspective,” Executive Office of the President, May 2014, accessed 17 February 2016, https://obamawhitehouse.archives.gov/sites/default/files/microsites/ostp/PCAST/pcast_big_data_and_privacy_-_may_2014.pdf.

[17] See, e.g., Federal Trade Commission, “Protecting Consumer Privacy in an Era of Rapid Change: Recommendations for Businesses and Policymakers,” March 2012, accessed 11 December 2016, https://www.ftc.gov/sites/default/files/documents/reports/federal-trade-commission-report-protecting-consumer-privacy-era-rapid-change-recommendations/120326privacyreport.pdf.

[18] Thomas Lenard and Paul Rubin, “Big Data, Privacy and the Familiar Solutions,” Technology Policy Institute, May 2014. See also John Podesta et al., “Big Data: Seizing Opportunities, Preserving Values,” Executive Office of the President, May 2014, accessed 17 February 2016, https://obamawhitehouse.archives.gov/sites/default/files/docs/big_data_privacy_report_5.1.14_final_print.pdf.

[19] President’s Council of Advisors on Science and Technology, “Big Data,” recommendation 1.

[20] The Dodd–Frank Act of 2010, Pub. L. 111–203, H.R. 4173, § 1021.

[21] Sunstein, “Empirically Informed Regulation,” 1369.

[22] Department of the Treasury, “Financial Regulatory Reform: A New Foundation,” 2009, 64, accessed 11 December 2016, https://www.treasury.gov/initiatives/Documents/FinalReport_web.pdf.

[23] See, e.g., Lessig, Code, 232.

[24] Lorrie Cranor, et al., “P3P Deployment on Websites,” Electronic Commerce Research and Applications 7, no. 3 (2008): 274–93.

[25] William McGeveran, “Programmed Privacy Promises: P3P & Web Privacy Law,” NYU Law Review 76, no. 6 (2001): 1846.

[26] Sunstein, “Empirically Informed Regulation,” 1354.

[27] Humphrey Taylor, “Most People Are ‘Privacy Pragmatists’ Who, While Concerned about Privacy, Will Sometimes Trade It Off for Other Benefits,” The Harris Poll 17 (2003).

[28] Heather Kelly, “Figuring out the Future of Online Privacy,” CNN Online, 28 February 2013.

[29] The information categories used in this sample graphic were informed by those used in Cranor, et al., “P3P Deployment on Websites.”