BY JESSICA “ZHANNA” MALEKOS SMITH

As the U.S., the U.K., Russia, China, South Korea, and Israel begin developing fully autonomous weapons (FAW) systems, the issue of state responsibility for such systems remains undeveloped. In fact, the term “state responsibility” did not even appear in the United Nations’ Group of Governmental Experts Chair’s summary of the latest discussions on lethal autonomous weapons systems. To better strategize how to mitigate state conflict arising from this technology, policymakers should be preparing for FAWs now, by establishing a legal framework of State-in-the-Loop responsibility.

There is a clear and demonstrable need for developing state accountability principles for FAWs. In fact, the NGO coalition Campaign to Stop Killer Robots advocates a total ban on “killer robots” in part because there is no accountability: “Without accountability, [military personnel, programmers, manufacturers, and other parties involved with FAWs] would have less incentive to ensure robots did not endanger civilians[,] and victims would be left unsatisfied that someone was punished for the harm they experienced.” A legal framework can help allay those concerns and would signal international commitment to the principles of the law of armed conflict.

The advantages of engineering a legal framework of State-in-the-Loop responsibility are twofold: (1) It would promote compliance with the jus in bello (law of war) principles of distinction, proportionality, necessity, and reducing unnecessary suffering; and (2) it would protect the flame of technological innovation and enterprise—a flame that might very well be extinguished under an outright ban.

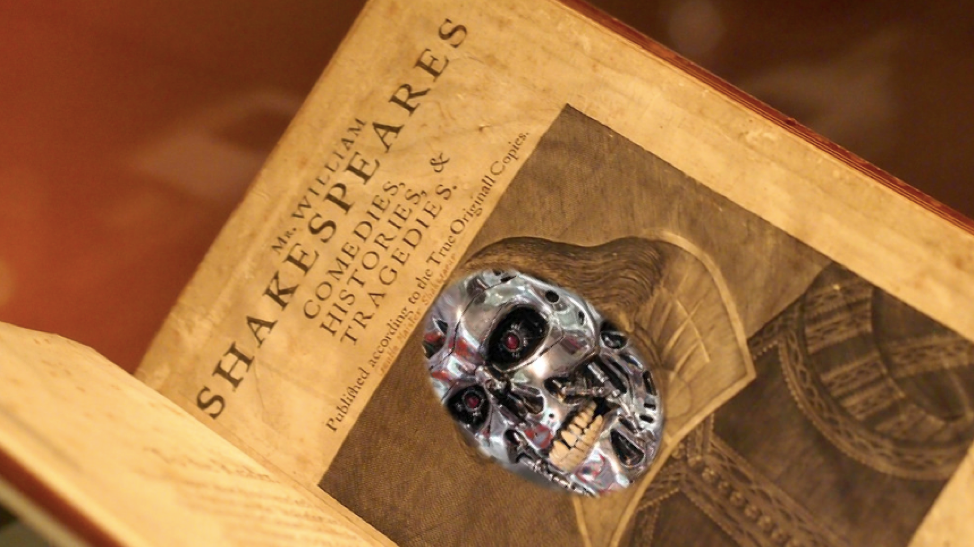

The next UN Group of Governmental Experts (UN GGE) meeting on lethal autonomous weapons systems will take place in Geneva in August 2018. This is a ripe opportunity for a discussion of implementing a State-in-the-Loop legal framework. And naturally, because this article champions the call for legal action, one can jokingly imagine why a “killer robot’s” first words might be: “The first thing we do, let’s kill all the lawyers.”

Shakespeare jokes aside, what are FAWs?

By definition, fully autonomous weapons systems do not depend on human input to function, but instead operate under control algorithms designed by system operators. In Human Rights Watch’s report Losing Humanity: The Case Against Killer Robots, unmanned robotic weapons are categorized according to three different levels of interface between human and machine: (1) Human-in-the-Loop weapons, which are “[r]obots that can select targets and deliver force only with a human command”; (2) Human-on-the-Loop weapons, which are “[r]obots that can select targets and deliver force under the oversight of a human operator who can override the robots’ actions”; and (3) Human-out-of-the-Loop weapons, which are FAWs.

State-in-the-Loop responsibility for FAWs

While liability for the physical harm caused by an FAW may extend to parties involved in its development, design, integration, and deployment, the state employing the technology should ultimately be held responsible. Lending support to this concept is the Martens Clause of 1899. This clause promotes imposing legal and political responsibility on states when the rules and customs surrounding armed conflict are undergoing development. Even in transition periods, like this current period in which we are developing FAWs, the principles of international humanitarian law still protect individuals.

The Tallinn Manual on the International Law Applicable to Cyber Operations also lends guidance here in how it reinforces the concept of state responsibility in cyberspace. I propose that the UN GGE overlay the Tallinn Manual’s articulation of state responsibility with FAWs. If the Tallinn Manual’s criteria for state responsibility for cyber warfare were co-opted to apply to FAWs, states would be responsible for the acts committed by FAWs under the following two conditions:

- When the act of an FAW in question is attributable to the State under international law; and

- When the FAW’s act constitutes a breach of an international legal obligation applicable to the State.

For example, if an FAW were to malfunction and harm or kill innocent civilians in a foreign combat environment (i.e., a breach of an international legal obligation), then (1) the act would be attributed to the state employing the technology, and (2) the state would be held accountable for breaching an international legal obligation to not harm innocent civilians.

FAWs necessitate more State-in-the-Loop accountability and oversight because of the heightened risk that these weapons could engage in the indiscriminate killing or wounding of civilians. Simply put, the more we remove the human operator from performing oversight of weapons systems, the more we should “loop in” the state to bear ultimate responsibility.

In November 2017, the 125 state parties to the 1980 Convention on Certain Conventional Weapons (CCW) gathered in Geneva for their annual meeting and continued their discussion from 2016 on how to regulate lethal autonomous weapons. Although the CCW does not explicitly address FAW technology, the argument can be made that it still encompasses FAWs by restricting the use of weapons that may cause unnecessary suffering and indiscriminately kill or wound civilians. While the 2017 assembly of the UN GGE did not reach a consensus on regulating FAWs, the next UN GGE meeting is scheduled to take place in August 2018. If the opportunity is seized, this forum could be a fruitful opening discussion on State-in-the-Loop responsibility. Attendees of the meeting should strive to chart a global policy that overlays the doctrine of state responsibility with FAWs. This kind of responsibility strikes the right balance between promoting responsibility and protecting innovation.

Another option: An outright ban on FAWs?

American inventor Thomas Edison famously remarked, “Many inventions are not suitable for the people at large because of their carelessness. Before a thing can be marketed to the masses, it must be made practically fool-proof.” Are FAWs “suitable” for states to use, or will they lead to unnecessary suffering, in violation of jus in bello? Human Rights Watch posits that humans are better equipped to make value-based judgment decisions because “robots would not be restrained by human emotions and the capacity for compassion, which can provide an important check on the killing of civilians.” But Michael Schmitt, a professor of law at the U.S. Naval War College, offers a counter-argument to this by reasoning that although “human perception of human activity can enhance identification in some circumstances, human-operated systems already frequently engage targets without the benefit of the emotional sensitivity cited by [Human Rights Watch].”

While the proponents of a total ban on FAWs may have good intentions, a broad mandate to prohibit this technology is not advisable because it fails to take into consideration the national security benefits that would be discarded. According to robotics expert Peter Singer, in military contexts robots are better suited for the “‘three D’s’—tasks that are dull, dirty, or dangerous.” Using FAWs to accomplish tasks involving Singer’s three D’s can help save the lives of military personnel and civilians, by reducing their exposure to certain deadly risks. Ultimately, the support for employing FAWs must be conditioned on their potential to mitigate suffering in war and the international community’s ability to abide by state responsibility.

Lastly, on a philosophical level, another concern about FAWs is that they lack empathy and cannot reason like human beings in evaluating whether it is ethical and legal to take a life. FAWs’ decision-making processes are not encumbered with fear, anxiety, or pain; however, human operators of unmanned aerial combat vehicles (i.e., drones) routinely grapple with ethical uncertainties both during and after targeting operations. For example, in his memoir, Drone Warrior, Brett Velicovich writes vividly about “second guesses” that crept up in his mind after each operation. Do “second guesses” indicate a higher capacity for moral reasoning? Even if so, this kind of critique of artificial intelligence (AI) may only capture the current state of technology. The story of AI is just beginning, and robots may prove to have the same emotional capacity as humans.

We cannot afford to become “robotic” in our approach to addressing state responsibility. Now is the time for responsibly engineering international legal guidelines for fully autonomous weapons.

Edited by Parker White

Header photo credit Sgt Wes Calder RLC // Body photo credit Wikimedia Commons and Swamibu Flickr (modified by the author)